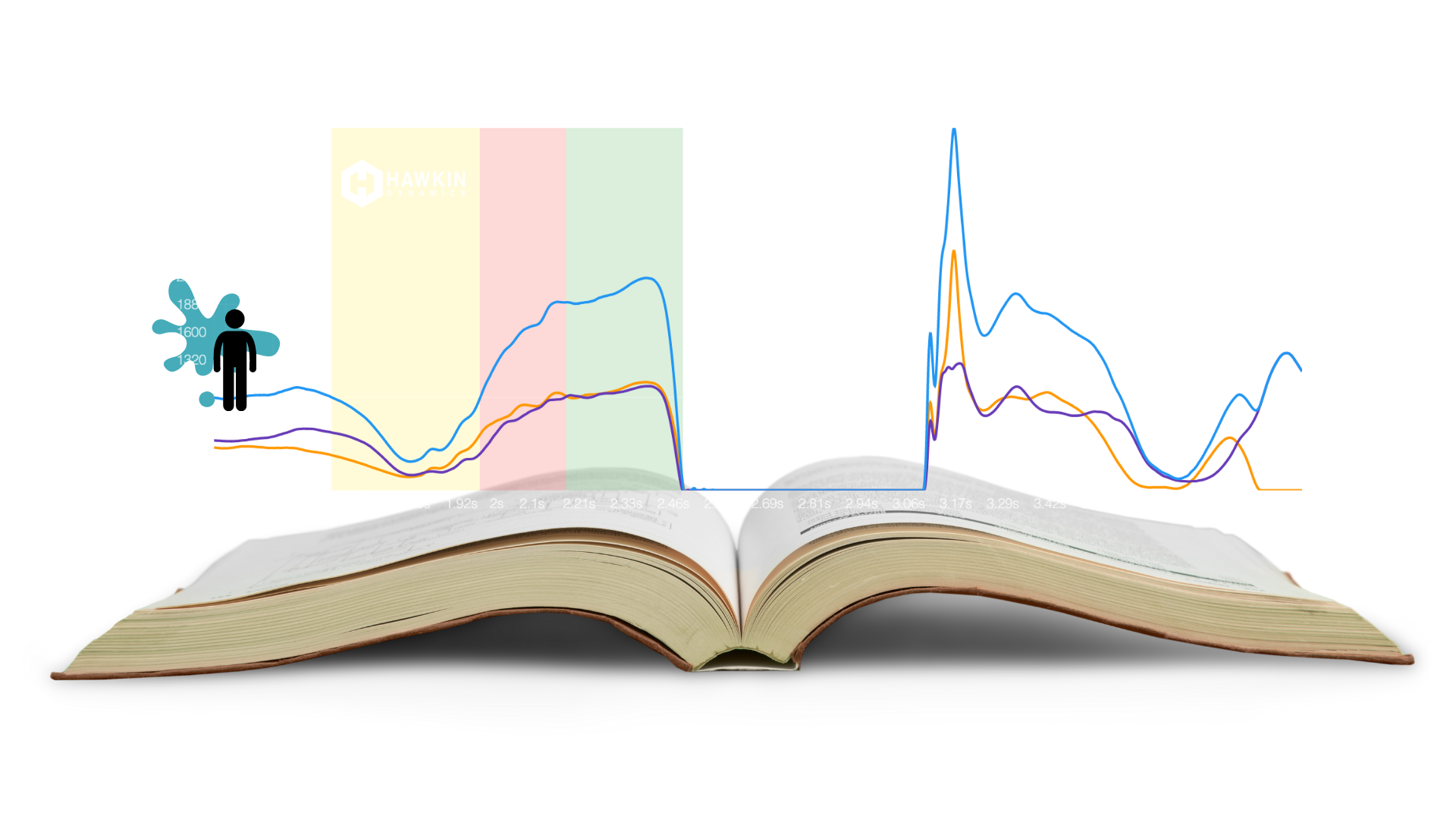

Every force-time curve can tell a story.

It’s true.

If you’ve used a standardized testing protocol, each force-time curve can provide interesting, exciting, and, most importantly, useful information about the neuromuscular capacity of your athletes.

This article will elaborate on an idea that I was lucky enough to present at the recent Middlesex University Strength and Conditioning Student Conference on 7th March 2020. This is an awesome annual event, organized by Chris Bishop (yeah, ‘asymmetry Chris’), that provides amazing value for money, learning, and networking opportunities (lots of biscuits too – that’s ‘cookies’ to those on the other side of the pond).

In the interests of full disclosure, I want to clarify: I didn’t have this idea by myself. Instead, it’s the product of many discussions with John McMahon (and others) about this kind of thing. If you’re interested in studying or understanding jump force-time data and you’re not familiar with John’s work, then I suggest you remedy that. Sharpish too.

If any of you follow either me or Hawkin Dynamics on social media, or if you tuned in for the Inaugural Talkin' Force Hawkin Dynamics Education Webinar earlier this week (week beginning 9th March 2020) you may have come across this. Either way, I think it has the potential to be very useful.

So, the ODS system...

For simplicity, I’m going to use the countermovement vertical jump (CMJ) as the task of interest, but you can apply this system to any ground-based task.

HD Sport Science Note: This system for selecting force-time metrics was previously called the "ODSF System" but has since been renamed the "ODS System".

‘O’ is for ‘Output’

While they might not necessarily be important, or indeed relevant, for all ground-based tests, output metrics can be used to provide immediate feedback to you and your athletes. The metric you choose should be easy for your athletes to grasp and it should give you both an idea of whether they’ve improved since the last test.

Whether they have improved or not isn’t necessarily a deal-breaker but that’s a discussion for another blog (also covered under S and somewhat in the last blog post).

An obvious example for the CMJ is jump height. Ask anyone to perform a CMJ and they’ll want to know how high they jumped. Combine that with the competitive element that develops naturally when testing a group and this can really help maximize their effort.

Other examples of potentially useful output metrics can include take-off velocity, peak velocity, mean velocity, and jump momentum (take-off velocity × jumper mass – another idea from John McMahon). You could even make a case for metrics like peak and mean power as they occur because of the D and S metrics, but I have more to say about these later.
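
As a minimal sketch of how a couple of these output metrics relate to one another, the Python below derives jump height and jump momentum from take-off velocity. The function names and the example values (80 kg, 2.8 m/s) are illustrative assumptions rather than data from any athlete, and jump height is estimated with the standard projectile relationship (take-off velocity squared, divided by twice gravitational acceleration).

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed standard value)

def jump_height(takeoff_velocity):
    """Jump height (m) from take-off velocity (m/s): h = v^2 / (2 g)."""
    return takeoff_velocity ** 2 / (2 * G)

def jump_momentum(takeoff_velocity, mass):
    """Jump momentum (kg*m/s): take-off velocity multiplied by jumper mass."""
    return takeoff_velocity * mass

# Hypothetical athlete: 80 kg, leaving the ground at 2.8 m/s
height = jump_height(2.8)
momentum = jump_momentum(2.8, 80.0)
print(f"Jump height: {height:.3f} m, jump momentum: {momentum:.1f} kg*m/s")
```

Two athletes with the same take-off velocity get the same jump height here, but the heavier one carries more jump momentum, which is why momentum may be the more relevant output in collision sports.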

So, choose your output metric(s) with a degree of sports specificity and relevance to your athletes in mind before moving on to the next one...

‘D’ is for ‘Driver’

For me, this is where things can start to get interesting. This part enables us to really get into what underpins the athlete’s performance. It can help us identify what they need to work on as we start thinking about how they might improve specific elements of their neuromuscular capacity.

These blog posts have focused on force-time data, so I think the most obvious example is the real driver of any movement: force – how hard the athlete pushes or pulls against the ground, a sports implement, or an opponent during any task. For the CMJ, we might want to consider braking phase mean force (the braking phase being the negative displacement-positive acceleration down sub-phase), and maybe propulsion phase mean force too (the propulsion phase being the up phase that includes the positive displacement-positive acceleration and positive displacement-negative acceleration sub-phases). Using the force at the lowest displacement could provide insight into how the athlete’s down-phase performance contributes to their up-phase performance and output variable(s).
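
To make those phase definitions a bit more concrete, here is a minimal Python sketch of how braking and propulsion phase mean force might be pulled from a CMJ force-time record. It assumes the record starts in quiet standing, has already been trimmed to end at take-off, and that the jumper's mass is known. The force trace is made up (piecewise constant, 80 kg jumper, 1000 Hz), and the function names `integrate` and `cmj_driver_metrics` are hypothetical. Real data would need proper onset and take-off detection first.

```python
G = 9.81    # gravitational acceleration (m/s^2)
DT = 0.001  # sample interval for an assumed 1000 Hz force plate (s)

def integrate(values, dt):
    """Cumulative trapezoidal integration, starting from zero."""
    out = [0.0]
    for i in range(1, len(values)):
        out.append(out[-1] + 0.5 * (values[i - 1] + values[i]) * dt)
    return out

def cmj_driver_metrics(force, mass, dt):
    """Return example driver metrics (N) from a vertical force trace."""
    body_weight = mass * G
    accel = [(f - body_weight) / mass for f in force]  # net force / mass
    velocity = integrate(accel, dt)
    displacement = integrate(velocity, dt)

    brake_start = velocity.index(min(velocity))     # peak downward velocity
    bottom = displacement.index(min(displacement))  # lowest displacement

    braking = force[brake_start:bottom + 1]
    propulsion = force[bottom:]
    return {
        "braking_mean_force": sum(braking) / len(braking),
        "propulsion_mean_force": sum(propulsion) / len(propulsion),
        "force_at_lowest_displacement": force[bottom],
    }

# Synthetic 80 kg jumper: a force drop, then braking, then propulsion
MASS = 80.0
BW = MASS * G
force_trace = ([BW - 400.0] * 300     # 0.30 s below body weight
               + [BW + 1000.0] * 120  # 0.12 s braking
               + [BW + 800.0] * 250)  # 0.25 s propulsion

drivers = cmj_driver_metrics(force_trace, MASS, DT)
for name, value in drivers.items():
    print(f"{name}: {value:.1f} N")
```

The braking phase is taken here as running from peak downward velocity to the lowest displacement, and propulsion from the lowest displacement to the end of the record. Other phase definitions exist, so treat the boundaries as one reasonable choice, not the only one.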

An argument could be made to include impulse here, but if we report net mean force, we can combine this with one of our strategy metrics to calculate impulse. Additionally, if you choose take-off velocity for an output metric then impulse can be calculated by multiplying this by the jumper’s mass.
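
Both routes to impulse can be sketched in a few lines of Python. The numbers are hypothetical (an 80 kg jumper, 800 N net mean propulsion force applied for 0.25 s, 2.5 m/s take-off velocity), and the sketch assumes the phase starts from zero velocity, as the propulsion phase does at the lowest displacement.

```python
def net_impulse(net_mean_force, phase_duration):
    """Net impulse (N*s) = net mean force (N) x phase duration (s)."""
    return net_mean_force * phase_duration

def impulse_from_takeoff_velocity(takeoff_velocity, mass):
    """Net impulse (N*s) = jumper mass (kg) x take-off velocity (m/s)."""
    return mass * takeoff_velocity

MASS = 80.0  # kg, hypothetical jumper
impulse_a = net_impulse(800.0, 0.25)  # driver metric x strategy metric
impulse_b = impulse_from_takeoff_velocity(2.5, MASS)  # output metric x mass
print(impulse_a, impulse_b)  # prints: 200.0 200.0
```

Because the two routes agree, reporting net mean force and phase duration already tells the reader everything impulse would, plus how that impulse was produced.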

Depending on the phase or phases you’re interested in, you should choose anything from one to three driver metrics - what drives the task?


‘S’ is for ‘Strategy’

When combined with driver metrics, strategy metrics enable us to effectively study and explain athlete performance. We now know that impulse drives movement and, from a mechanical perspective, is perfectly related to take-off velocity and jump height.

How did the athlete arrive at the output metric?

Where we use propulsion phase mean force as a driver metric, we could use propulsion phase duration as its accompanying strategy metric. This is because the time over which force is applied provides insight into the jumper’s strategy.

So, whenever we report mean force from a phase of interest to describe a movement driver, I would recommend that we also consider phase duration to help explain its strategy. It might also be worth considering total movement time (also referred to as time to take-off and contact time). With this, we can consider the relative contribution of each phase’s duration to the total movement duration.
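
As a rough illustration of that relative contribution idea, the Python below expresses each phase's duration as a percentage of total movement time. The phase names and durations are made-up example values, and `phase_contributions` is a hypothetical helper.

```python
def phase_contributions(phase_durations):
    """Return each phase's share (%) of total movement time."""
    total = sum(phase_durations.values())
    return {name: 100.0 * t / total for name, t in phase_durations.items()}

# Hypothetical CMJ phase durations in seconds
durations = {"unweighting": 0.30, "braking": 0.12, "propulsion": 0.25}
time_to_takeoff = sum(durations.values())
shares = phase_contributions(durations)
for name, pct in shares.items():
    print(f"{name}: {pct:.1f}% of {time_to_takeoff:.2f} s")
```

Tracking these shares across test sessions is one way to spot a change in strategy even when the output metric hasn't moved.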

Finally, for this part of the system, I think it can be useful to consider metrics like countermovement displacement (dip depth) and propulsion phase displacement (lowest squat position to take-off position). With phase duration(s), these can help provide a detailed picture of movement strategy. They can also form the basis for the selection of metrics from our final part of the system.

Let’s briefly consider another common athlete assessment task - the isometric mid-thigh pull. Probably the most important reason for using this kind of test is that it enables us to consider how the athlete applies force over time. As mentioned in previous posts, this is key because most sports performances are constrained by time. Therefore, the force and time metrics that are often used (e.g. force at 100 ms) provide a perfect example of the ODSF system in practice (obviously, I’m not trying to lay claim to this - this is the product of an awesome body of research).

‘F’ is for ‘Fluffy’

HD Sport Science Note: 'F' has since been omitted from the title "ODS System". We still believe it's important to keep this section in here for insight on our history, and evolution as a company. It's our mission to better help our customers understand and report force-time metrics more effectively.

Some have taken this fourth category title personally. Please believe me when I say that there is nothing personal about this. My only motivation for bringing attention to this element (and the associated metrics) is to push practitioners and researchers to take real ownership of the data they use to quantify neuromuscular capacity. To understand it and take responsibility for how it’s calculated. I think that this is incredibly important.

As a field, we must remember that people watch what we do. While we have an obligation to those we work with to use the most scientifically robust metrics to explain performance, we must also be aware that our work can impact those at the beginning of their practitioner/researcher journey. Let’s set as good an example as we can.

To get to the point though, I feel that fluffy metrics describe the kind of metrics that are used because we’ve always reported them. I think that being able to rationalize the use of a metric based on our understanding is an incredibly important part of what should be a transparent approach to this kind of work. Without transparency (and there will be a blog post on this) how on earth can others take our work seriously and use it to inform their work?

So, fluffy metrics. For my money, and I appreciate that this may be met with a certain amount of resistance, these include metrics like peak power, mean power, and rate of force development (RFD). There are many others, but this is a good start.

Can these metrics be useful? Absolutely!

However, it is our responsibility to ensure we know what they represent.

As a field, we’re well aware of the numerous issues that surround the calculation of RFD during ballistic tasks like the CMJ. However, we’re very light on solutions and instead seem determined to keep using it.

Do me a favor, please stop this!

It sets a terrible example and makes zero sense. Understand how force-time data is processed to calculate this kind of metric, and understand the issues with these processes. Consider that a lot of the published peak RFD data are more than likely signal noise. Crazy, right? Please, and I really mean this, prove me wrong.
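
To show the mechanism behind that worry (not to prove anything about published data), here is a small Python sketch. It builds a perfectly flat 1000 N signal, adds an assumed ±1 N of random noise at 1000 Hz, and then calculates peak RFD over a 1 ms window and a 50 ms window. The noise amplitude, window lengths, and the `peak_rfd` helper are all illustrative assumptions.

```python
import random

FS = 1000      # sampling frequency (Hz)
DT = 1.0 / FS  # sample interval (s)

def peak_rfd(force, window_samples, dt):
    """Largest force change per second across a moving window."""
    return max(
        (force[i + window_samples] - force[i]) / (window_samples * dt)
        for i in range(len(force) - window_samples)
    )

random.seed(1)  # fixed seed so the example is repeatable
# A flat 1000 N signal with +/-1 N of made-up noise; the true RFD is zero
noisy_force = [1000.0 + random.uniform(-1.0, 1.0) for _ in range(1000)]

rfd_1ms = peak_rfd(noisy_force, 1, DT)
rfd_50ms = peak_rfd(noisy_force, 50, DT)
print(f"Peak RFD, 1 ms window:  {rfd_1ms:.0f} N/s")
print(f"Peak RFD, 50 ms window: {rfd_50ms:.0f} N/s")
```

The athlete in this example isn't changing force at all, yet the 1 ms window can report a peak RFD of up to 2000 N/s, while the 50 ms window can't exceed 40 N/s. The shorter the window, the more the result reflects noise.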

What about power then? Well, potential issue number one: if we collect our force-time data at 1000 Hz (1000 samples per second) then peak power will represent the rate of work performed over 1 ms. That’s right, one-thousandth of a second! Can this metric be related to other, important elements of athlete performance? Yes, it can. So what though? It’s not a driver so from a trainability perspective I’d argue that it receives far too much attention. After all, rather than being interested in it, we should instead focus on metrics that we can train to improve athlete performance.
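
A tiny Python sketch makes the single-sample nature of peak power easier to see. Power is calculated here as force multiplied by velocity at each sample, and the five force and velocity values are invented for illustration.

```python
FS = 1000  # sampling frequency (Hz)

def peak_power(force, velocity):
    """Return (peak power in W, index of the sample where it occurs)."""
    power = [f * v for f, v in zip(force, velocity)]
    peak = max(power)
    return peak, power.index(peak)

# Five made-up consecutive samples near the end of propulsion
force = [1900.0, 1950.0, 1980.0, 1960.0, 1900.0]  # N
velocity = [2.00, 2.05, 2.10, 2.15, 2.20]         # m/s

peak, idx = peak_power(force, velocity)
print(f"Peak power {peak:.0f} W at sample {idx}, spanning {1000 / FS:.0f} ms")
```

Whatever the length of the record, the reported peak comes from one sample, here the fourth of the five.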

Is this the case for mean power too? Not necessarily. It can have a place, but only, and I repeat only, if both the time the work is performed in and the range of motion the work is performed over are considered. If not, what’s the point? And I really mean that. So, mean power can be promoted to be a useful output metric, but only if driver and strategy metrics are chosen carefully to support it.
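
Here is a short Python sketch of why mean power needs that supporting context. It treats mean power as work (force multiplied by displacement) divided by duration, and assumes a constant force across the phase so that the arithmetic stays simple. The two athletes and their numbers are invented to make the point and aren't meant to represent a physically consistent jump.

```python
def mean_power(mean_force, displacement, duration):
    """Mean power (W) = work (mean force x displacement) / duration."""
    return mean_force * displacement / duration

# Two hypothetical athletes, constant force assumed across the phase
athlete_a = mean_power(mean_force=1600.0, displacement=0.40, duration=0.32)
athlete_b = mean_power(mean_force=2000.0, displacement=0.30, duration=0.30)
print(f"A: {athlete_a:.0f} W, B: {athlete_b:.0f} W")
```

Both athletes land on roughly the same mean power, but one gets there with less force over a longer range and time, the other with more force over a shorter range and time. Without the driver and strategy metrics alongside it, the mean power value can't tell you which.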

Remember that ultimately, it’s these metrics that we’ll be trying to improve with training.

Are we done?

Well yes, we are (for now). Let me summarize…

When it comes to quantifying your athlete’s performance with force-time metrics, do the following using the ODS system…

- Choose one, maybe two, output metrics - what is an obvious performance output?

- Support these with one to three driver metrics - what drives the task?

- Try to identify one to three strategy metrics - what strategy is your athlete using (knowingly or otherwise)?

- Are fluffy metrics needed? If so, how? How will these help support your assessment? If, and this is an important if, you have a scientifically robust rationale for including fluffy metrics then choose one to three.

As always, please hit me up with any feedback or questions.

The inspiration for this framework was first published in a paper by Chris Bishop et al. (2021).

Many conversations with Dr. John McMahon also helped establish this framework.
